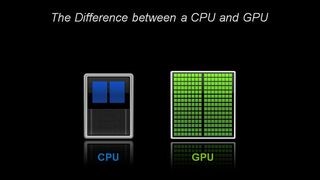

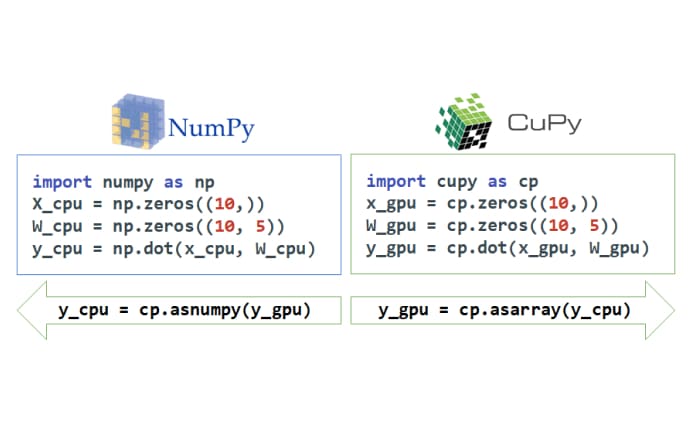

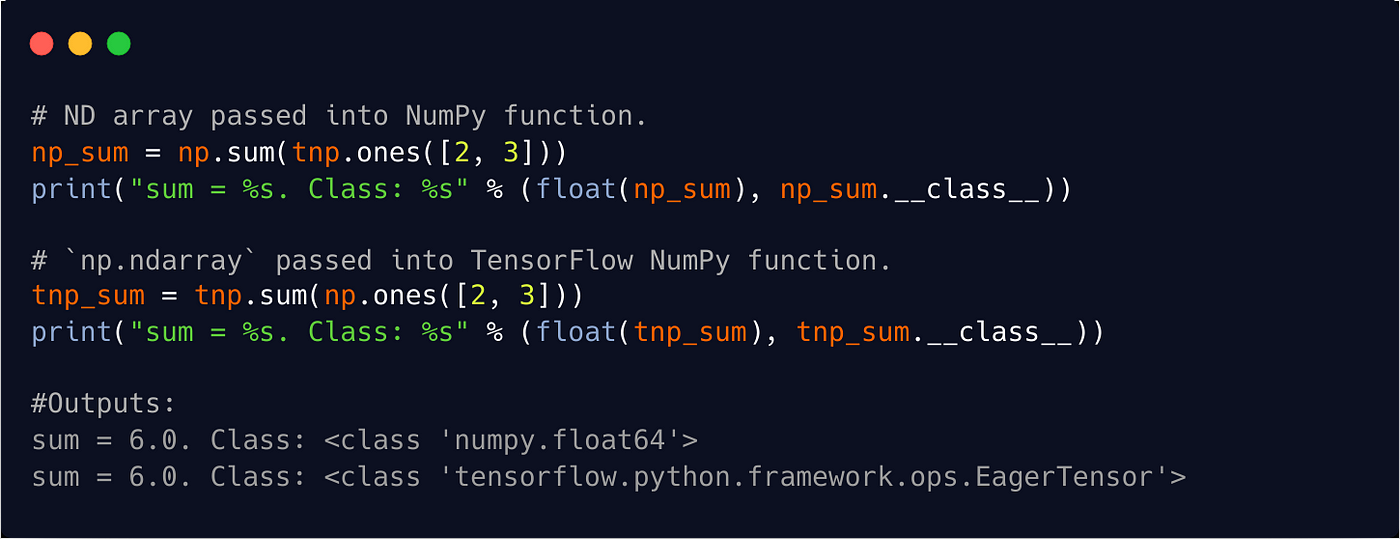

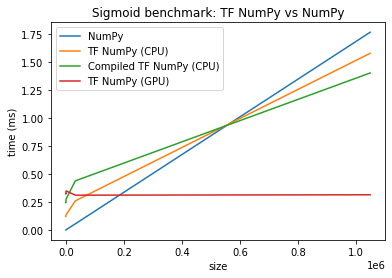

Numpy on GPU/TPU. Make your Numpy code to run 50x faster. | by Sambasivarao. K | Analytics Vidhya | Medium

Backpropagation fails after moving tensor from GPU to CPU (numpy version) - autograd - PyTorch Forums

performance - Why is numpy.dot as fast as these GPU implementations of matrix multiplication? - Stack Overflow

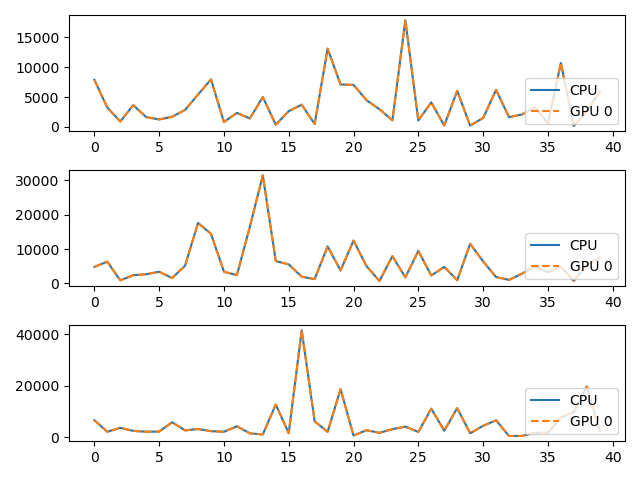
