NVIDIA vComputeServer with NGC Containers Brings GPU Virtualization to AI, Deep Learning and Data Science

From Deep Learning to Next-Gen Visualization: A GPU-Powered Digital Transformation | NVIDIA GTC 2019

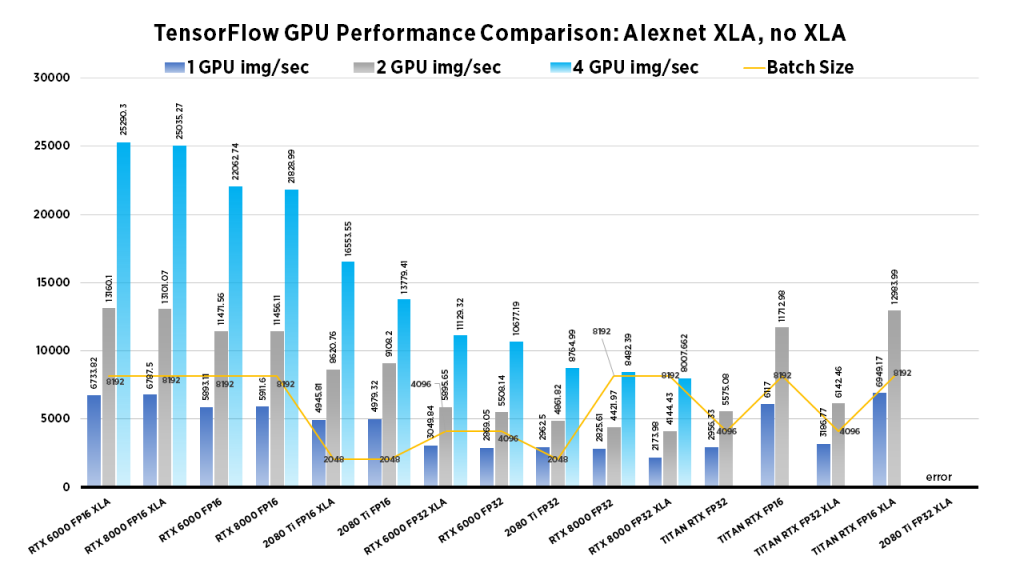

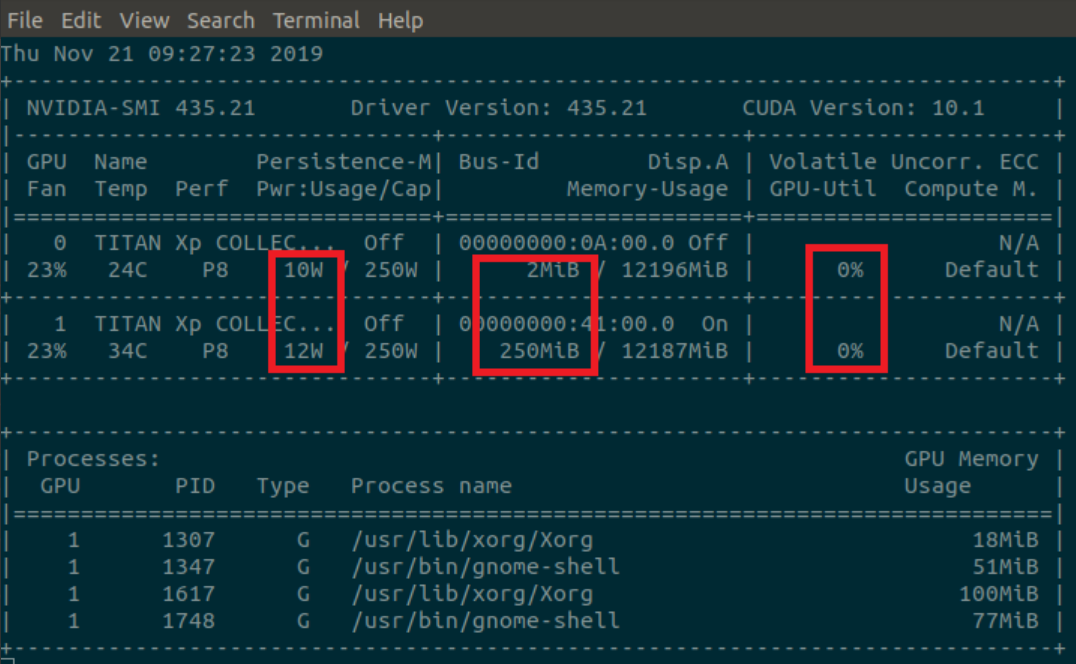

Setting Up a Multi-GPU Machine and Testing With a TensorFlow Deep Learning Model | by Thomas Gorman | Analytics Vidhya | Medium

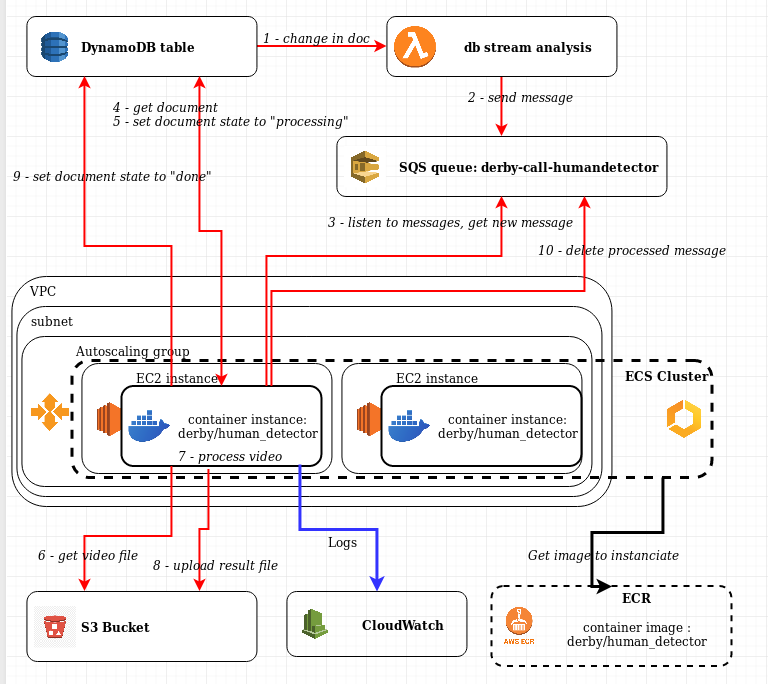

Deploying on AWS a container-based application with deep learning on GPU - Xenia Conseil - Cyril Poulet

Monitor and Improve GPU Usage for Training Deep Learning Models | by Lukas Biewald | Towards Data Science